When a browser stops being just a viewport and becomes a collaborator, we cross into a new terrain of risk. With the release of ChatGPT Atlas by OpenAI and the growing class of agentic browsers like Comet by Perplexity, your corporate web-surfing tool is no longer passive. It’s active. These browsers embed an LLM-driven assistant inside the browser itself, offering not only summarization but also action like opening pages, interacting with apps, or taking commands.

From a CISO standpoint, this means your attacker’s playing field just got a lot bigger.

Key insights

Agentic browsers raise numerous security concerns. CISOs must consider the following:

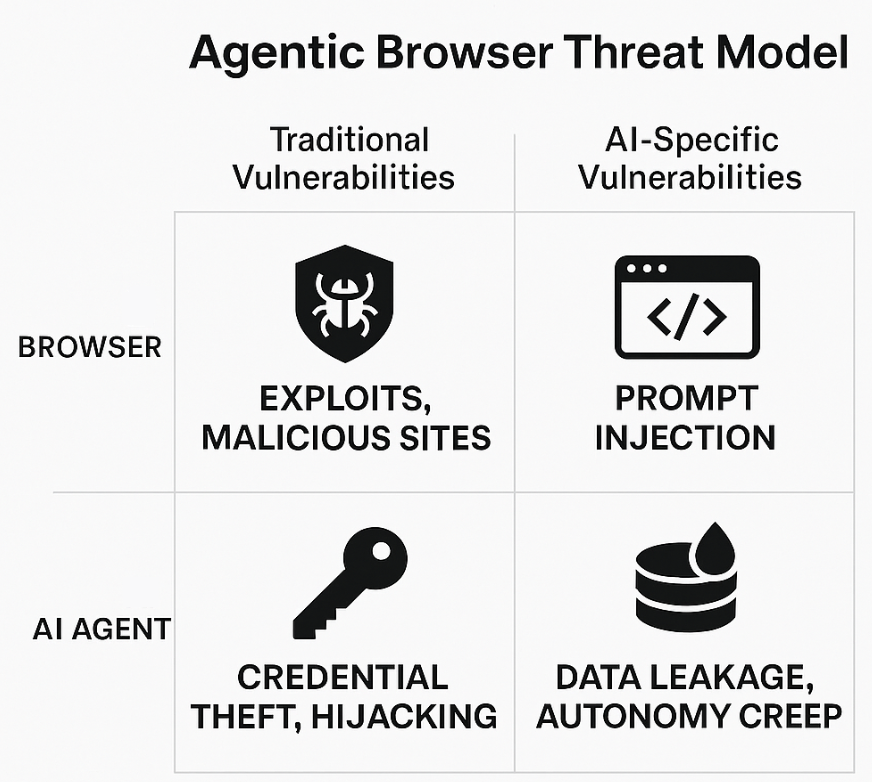

- Two converging risk domains: Agentic browsers inherit all the legacy browser attack surfaces (CVE exploits, malicious extensions) and introduce new AI-specific vulnerabilities like prompt injection and model manipulation.

- Expanded attack surface: By merging browsing, reasoning, and autonomous action, these tools centralize risks that were once isolated across multiple applications.

- Action without oversight: AI autonomy can blur the human-in-the-loop boundary, allowing compromised agents to execute harmful tasks within authenticated sessions.

- Data exposure risks: Embedded LLMs may inadvertently store or transmit sensitive data during context accumulation, prompting stricter DLP and segmentation policies.

- Governance must evolve: Existing browser security frameworks aren’t enough. CISOs must integrate AI-specific policies, telemetry, and user training into governance programs.

- Controlled adoption is essential: Safe rollout requires sandboxed pilots, privilege restrictions on agent actions, continuous monitoring, and policy alignment before scaling enterprise use.

Why are agentic browsers becoming popular?

There are several factors behind the rise of the agentic browser:

- Efficiency demands: Employees want one place to research, draft, schedule, run workflows. They don’t want to bounce between separate AI tools, browsers, SaaS apps.

- Democratization of AI: Not every employee is an AI power-user. Agentic browsers lower the barrier to “tell it what I want done.”

- Vendor consolidation: Rather than managing multiple plugins, extensions, AI tools, you get one “smart browser” surface.

But convenience equals exposure. We are shifting from browser + separate AI tool + apps to agentic browser = browser + AI agent + action capability. That fusion gives adversaries one large attack surface instead of three.

Question: How ready is your organization for a browsing tool that can take action—and not just show pages—on behalf of the user?

Two threat tracks: legacy browser risks vs. AI-agentic risks

There are two threat tracks that you need to be concerned about: classic browser vulnerabilities and agentic AI vulnerabilities.

Classic browser vulnerabilities

Don’t be fooled. Even before the AI agent angle, many threats still apply to browsers, including the following:

- Exploits of browser code, extensions or plugins (CVE-type bugs).

- Malicious websites delivering drive-by downloads, watering-hole attacks.

- Credential capture, session hijacking, clipboard injection attacks (recently seen in Atlas).

- Privilege escalation via browser access to local resources.

In short, the agentic browser is still a browser. All the old mechanisms apply, and you must harden it like you would any endpoint or browser environment.

Question: Which of your existing browser security controls (sandboxing, patching, extensions monitoring) can you immediately apply to agentic browsers?

AI-specific vulnerabilities with agentic browsers

Agentic browsers add a second vector: risks inherent to the AI-agent layer. These are not simply browser flaws. They are new failure modes introduced by language models + actions + automation. Consider the following:

- Indirect prompt injection: A webpage embeds hidden instructions (invisible text, images, code) which the AI-agent interprets as commands. Recent research showed how browsers like Comet and others were vulnerable to exactly this.

- Action-taking by the agent under elevated privileges: When the AI has the ability to click, submit forms, or navigate as the user, the risk is magnified. One research paper pointed out that once the agent acts with the user’s auth state, traditional web security assumptions break down.

- Supply-chain/back-door risks to LLM-agent systems: When the model or training pipeline is compromised or poisoned, the agent may act maliciously from within. (See: back-door triggers in the data/pipeline.)

- Data-leakage and autonomy creep: The agent might access, store, or transmit sensitive data (especially given context accumulation). If granted autonomous permissions, it could execute beyond intended scope.

- Hybrid threat surface: Because the system is “browser + AI agent + action engine,” you need to defend multiple domains—web security, AI model security, automation logic—simultaneously.

Thus, the security mindset must expand. You should be thinking not only “can someone exploit this browser plugin?” but also “can someone manipulate what the AI thinks, the decisions it makes, and its permitted actions?”

Question: Have you mapped which agentic browser features in your estate allow actions (not just reading) and what guardrails you have against those?

Strategic playbook: how to safely introduce agentic browsers

As CISO, you’re not banning everything. You’re controlling the rollout while the technology matures. Here’s a pragmatic approach:

- Start with a controlled rollout

Begin with a small, low-risk cohort (e.g., marketing, research) using the agentic browser in a sandbox environment. Monitor closely. Ensure telemetry, logging, and visibility from day one. - Restrict autonomous modes

Treat “agent action” capabilities like privileged execution. Disable or limit fully autonomous workflows until you’ve proven controls. Require explicit confirmation for high-risk actions (e.g., submitting forms, executing flows, connecting to internal systems). - Extend existing browser/AI controls into this realm

- Apply Zero Trust principles: enforce least privilege and minimize agentic browser permissions.

- Data Loss Prevention: block regulated data (PII, PHI, financials) from being fed into agent prompts or being extracted by the agent.

- Authentication & identity: link browser-agent sessions to enterprise SSO, enforce strong MFA on actions.

- Separate “agentic browsing” from “sensitive browsing”: don’t let high-privilege sessions run in the same context as the agent.

- Policy and governance refresh

Update acceptable-use policies to clarify:- When agentic browser use is authorized.

- Which workflows are allowed to be automated and which require human oversight.

- Logging/telemetry expectations: which sessions will be recorded, reviewed, audited.

- User training for new failure modes

Your users must understand:- What agentic permission means (it’s not just a ‘smart tab’).

- How to recognize when the browser agent acts unexpectedly.

- The concept of prompt hygiene (what they paste, what they ask, what the agent’s context is).

- How to report misbehavior of the agent, just like they do phishing.

- What agentic permission means (it’s not just a ‘smart tab’).

- Continuous monitoring and telemetry fusion

Feed agent-browser events into your SIEM/XDR: agent activations, session logs, large content uploads, unusual cross-domain navigation. Review anomalies. This is an emerging area—submit agent-browser logs into your governance/AI-risk committee.

Question: Which of these six controls are you missing today, and which is your top priority to implement within the next 90 days?

Final word: embrace but don’t be fooled

Agentic browsers represent a paradigm shift. Embedding AI into the browser means one fewer tool hopping around, but it also means one broader attack surface. The tension is real: you must not hamper productivity by reflexively blocking innovation, but you also must not let guardrails lag behind.

My advice: act like you did during the early days of mobile adoption or cloud SaaS proliferation—pilot, monitor, govern. The goal isn’t to have an airtight, perfect deployment on day one. It’s to bring the tech into your environment in a risk-aware way that protects your people, your data, and your company.

Remind yourself that giving a tool the right to “do for me” means also giving an adversary more potential if that tool is compromised or misused. Keep human oversight, keep logs, keep control. Innovation doesn’t have to come with chaos.

Question: When you look ahead 12 months, how will you know your organization handled the agentic browser rollout successfully rather than having it become a rogue vulnerability vector?

How TrojAI can help

TrojAI delivers security for AI. Our mission is to enable the secure rollout of AI in the enterprise. Our comprehensive security platform for AI protects AI models, applications, and agents. Our best-in-class platform empowers enterprises to safeguard AI systems both at build time and run time. TrojAI Detect automatically red teams AI models, safeguarding model behavior and delivering remediation guidance at build time. TrojAI Defend is an AI application firewall that protects enterprises from real-time threats at run time.

By assessing the risk of AI model behavior during the model development lifecycle and protecting it at run time, we deliver comprehensive security for your AI models and applications.

Want to learn more about how TrojAI secures the largest enterprises globally with a highly scalable, performant, and extensible solution?

.png)